What is MLOps?

Updated: Jun 13, 2022

Over the past few decades, businesses have become increasingly aware of the value of data and how it can help them grow and make better-informed decisions. As a result, machine learning (ML) has become a core part of how many businesses operate. A growing set of tools, grouped under the umbrella term machine learning operations (MLOps), is being built to help automate the ML pipeline, from development through post-deployment.

To understand MLOps, we first need to understand why it emerged. So let’s take a step back and look at the ML lifecycle and its main challenges.

The ML Lifecycle

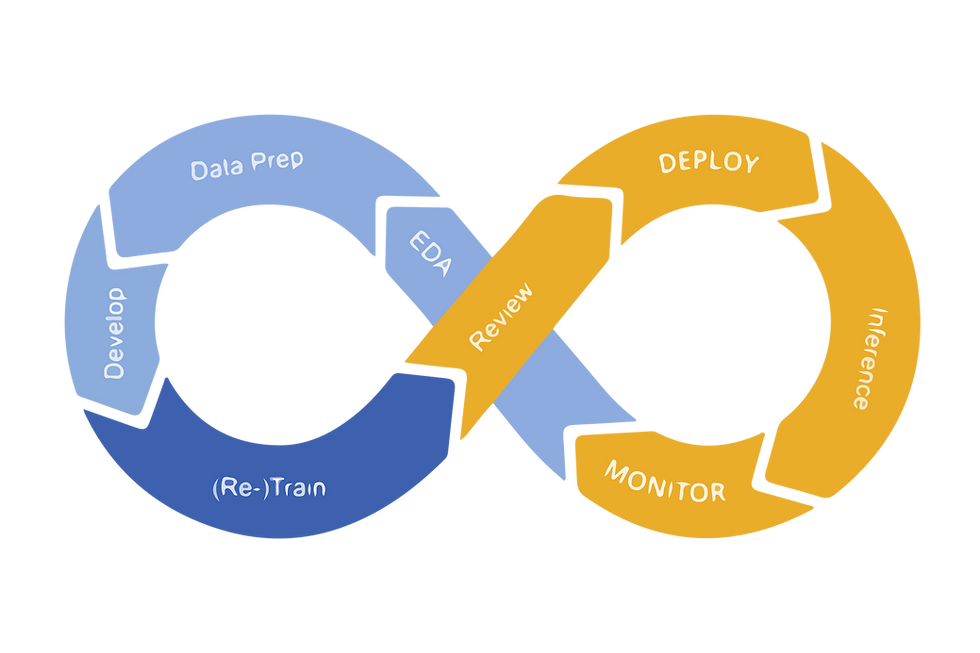

A typical ML lifecycle starts with defining and understanding the problem statement and gathering requirements before any development begins. Once all project stakeholders are onboarded, the cycle goes through four main stages, as shown in Fig. 1. It begins with data preparation, in which data is gathered, cleaned, and prepared for building the model.

Once the data is ready, it is used for feature engineering, training, and validating the model in the second phase, model development. When the model is production-ready, DevOps and software engineers are onboarded. Together with the data scientists, they work on the third phase, model deployment: provisioning the required infrastructure and integrating the model into the existing production environment through APIs.
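To make "integrating the model through APIs" concrete, here is a minimal sketch of a prediction endpoint built only on Python’s standard library (`wsgiref`). It assumes a toy linear model: `WEIGHTS`, `BIAS`, `predict`, `serve`, and the `/predict` route taking a `{"features": {...}}` JSON body are hypothetical choices for illustration, not part of any particular platform.

```python
import json
from wsgiref.simple_server import make_server

# Hypothetical stand-in for a trained model: a fixed linear scorer.
WEIGHTS = {"age": 0.02, "income": 0.00001}
BIAS = -0.5


def predict(features: dict) -> float:
    """Score one record; features missing from the request count as 0."""
    return BIAS + sum(w * float(features.get(name, 0.0)) for name, w in WEIGHTS.items())


def _json_response(start_response, status: str, body: dict) -> list:
    start_response(status, [("Content-Type", "application/json")])
    return [json.dumps(body).encode("utf-8")]


def app(environ, start_response):
    """WSGI app exposing POST /predict with a JSON body like {"features": {"age": 40}}."""
    if environ.get("PATH_INFO") != "/predict" or environ.get("REQUEST_METHOD") != "POST":
        return _json_response(start_response, "404 Not Found", {"error": "not found"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length))
        score = predict(payload["features"])
    except (ValueError, KeyError, TypeError, AttributeError) as exc:
        # Malformed JSON, missing "features", or non-numeric values.
        return _json_response(start_response, "400 Bad Request", {"error": str(exc)})
    return _json_response(start_response, "200 OK", {"score": score})


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Run a local development server (blocks until interrupted)."""
    with make_server(host, port, app) as server:
        server.serve_forever()
```

Calling `serve()` starts a local development server that other systems can reach over HTTP instead of importing the model directly. A real deployment would typically add authentication, input validation, and logging, and run behind a production-grade server.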

The fourth phase, monitoring and maintenance, makes or breaks a machine learning project: data, models, and infrastructure all require constant attention from every stakeholder. In some cases, this phase also includes retraining, experimenting with different models, and evaluating model performance to keep the model performing as well as possible.
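One common way to experiment with a new model in production is a champion/challenger (canary) split: a small, fixed share of requests goes to the new model while the rest stay on the current one, and the two are compared on live outcomes. The sketch below assumes each request carries a stable ID and hashes it with SHA-256 so the same ID always lands on the same variant; the names `route` and `VariantStats` and the 10,000-bucket granularity are hypothetical choices for illustration.

```python
import hashlib
from collections import defaultdict


def route(request_id: str, challenger_fraction: float) -> str:
    """Deterministically assign a request to 'challenger' or 'champion'."""
    # Map the ID to a stable bucket in [0, 10000); the same ID always gets the same bucket.
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 10_000
    return "challenger" if bucket < challenger_fraction * 10_000 else "champion"


class VariantStats:
    """Track per-variant accuracy once ground-truth labels arrive."""

    def __init__(self) -> None:
        self.correct = defaultdict(int)
        self.total = defaultdict(int)

    def record(self, variant: str, prediction, label) -> None:
        self.total[variant] += 1
        self.correct[variant] += int(prediction == label)

    def accuracy(self, variant: str) -> float:
        if not self.total[variant]:
            return float("nan")  # no labelled traffic yet
        return self.correct[variant] / self.total[variant]
```

Comparing `accuracy("challenger")` with `accuracy("champion")` over enough traffic is what would justify promoting or rolling back the new model; a careful rollout would also check that the difference is statistically meaningful.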

Challenges faced in the ML lifecycle

Compared with the traditional software lifecycle, the ML lifecycle is still immature. Data science practitioners today face several challenges that slow down the fast-paced delivery of ML models that businesses expect. A few examples of these challenges are:

Difficulty setting up a versioning pipeline for data and models: As with any other system, model experiments, along with the data they use, need to be version-controlled for reproducibility and tracking.

Productizing the code: Converting experimental code into production-ready code that integrates easily with the existing environment.

Lack of data and model security and governance: Difficulty creating workflows and approval systems, and models and data getting lost within the team.

Experimenting with different models in training and production: Data scientists often want to try several models for the same problem, which requires additional, repetitive software and DevOps work for each model added.

Lack of dedicated resources for each project: When a single team serves different projects and requirements across the organization, the process takes longer and the risk of project failure rises.

Scattered responsibility among the team: The cycle requires a range of skill sets: DevOps, frontend, and backend engineers, as well as data scientists. Having a different owner for each function can easily create confusion about who is responsible for what.
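The first challenge, versioning data and models together, can be illustrated with a small sketch: hash the exact bytes of the training data and store that hash next to each experiment’s configuration and results, so any result can be traced back to the data that produced it. This is a simplified example under stated assumptions (a single data file and a JSON Lines log, with hypothetical helper names `dataset_version`, `log_experiment`, and `load_experiments`); dedicated data and experiment versioning tools do considerably more.

```python
import hashlib
import json
import time
from pathlib import Path


def dataset_version(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return a SHA-256 content hash identifying this exact version of a file."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        # Read in chunks so large files do not need to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_experiment(log_file: Path, data_path: Path, params: dict, metrics: dict) -> dict:
    """Append one experiment record (data version + config + results) as a JSON line."""
    record = {
        "timestamp": time.time(),
        "data_file": str(data_path),
        "data_sha256": dataset_version(data_path),
        "params": params,
        "metrics": metrics,
    }
    with log_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def load_experiments(log_file: Path) -> list:
    """Read all logged experiments back, oldest first."""
    with log_file.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

The same `dataset_version` function could hash a saved model file, so a record can pin both the data and the model artifact that an experiment produced.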

These are only a few of the challenges; the list goes on. These problems, and others like them, are why MLOps emerged and why it has become so popular and in such high demand. Which brings us to the questions: what is MLOps, and what does it solve?

So, what exactly is MLOps?

The short answer: MLOps is a set of practices, adapted from DevOps, that streamline the ML lifecycle from the exploratory phase through post-deployment. It unifies the ML pipeline in terms of scalability, governance, security, maintainability, and automation across the development, deployment, and post-deployment phases.

Adopting MLOps is quickly becoming standard practice in AI-driven organizations, as it mitigates many of the challenges that data science practitioners and software engineers face in their daily work. Let's dive into the main components of MLOps and why they matter.

Main components of MLOps

1. Data Preparation: Preparing and tracking the data used in each model and experiment is harder than many engineers initially expect. In many cases, the data behind a model gets lost among different datasets that were never versioned alongside it. MLOps tools fill this gap by minimizing the manual work required to extract, annotate, and clean training data, and by providing governance and versioning of datasets.

2. Model Development: During model development, the transformed data is fed into the model for training. Training might require special compute-optimized infrastructure, which has to be set up. Additionally, data scientists and ML engineers experiment with different model configurations to get the best results. This creates the need for experiment tracking, to record every configuration and its corresponding results. A model versioning system may also be needed to store the models that the experiments produce.

3. Model Deployment: One of the key principles of MLOps is the ability to build a deployment pipeline that integrates easily into the existing infrastructure. This requires a prediction endpoint exposed as a web service so other systems can communicate with the model; auto-scaling infrastructure to handle variable traffic volumes; dashboards that provide full observability over the model in production; and supporting features, such as retraining and experimentation, that keep the model performing well.

4. Monitoring and Maintenance: This phase defines the success of any project. By using the dashboards built for the deployment, all stakeholders can monitor its different parts, such as API health, statistical trends in the data, drift warnings, and model performance. That way, if the model degrades, appropriate action can be taken in time. Some tools complement these dashboards with secondary features that help sustain a model in production, such as retraining, challenger/canary models for experimenting in the production environment, rollback between model versions, and workflow approvals.
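Drift warnings of the kind listed above usually come from comparing the distribution of live inputs against the training data. As one illustrative technique (not necessarily what any given tool uses), the sketch below computes the Population Stability Index (PSI) for a single numeric feature, with bins taken from quantiles of the reference sample. The 0.1 and 0.25 cut-offs in the hypothetical `drift_level` helper are a widely quoted rule of thumb rather than a standard, so treat them as assumptions to tune.

```python
import math
from bisect import bisect_right
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        n_bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index between a reference sample and a live sample.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are
    the shares of each sample falling into bin i.
    """
    ref = sorted(expected)
    # Interior cut points at the reference quantiles (n_bins - 1 of them).
    edges = [ref[int(len(ref) * k / n_bins)] for k in range(1, n_bins)]

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * n_bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(score: float) -> str:
    """Map a PSI score to a coarse label using rule-of-thumb thresholds."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"
```

A monitoring job might compute this per feature on a schedule and raise an alert, or trigger retraining, when a feature reaches "significant drift".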

Impact of MLOps on organizations

Incorporating MLOps benefits every stakeholder in an AI project. Data scientists are free to focus on building great models and analyzing their performance in production. Software and DevOps engineers are relieved of the repetitive work of creating dashboards, allocating and monitoring infrastructure, and manually scaling resources up or down in response to traffic surges. Business teams benefit the most: they get changes in near real time when they request them, along with well-performing models, which reflects positively on their numbers.
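Manual scaling in response to traffic surges is exactly what autoscalers automate. As a sketch of the idea, the function below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), skips scaling inside a small tolerance band to avoid flapping, and clamps the result to a minimum and maximum. The metric (for example, requests per second per replica), the function name, and the default limits are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Replica count modeled on the Kubernetes HPA scaling formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current size to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below the floor or above the ceiling.
    return max(min_replicas, min(max_replicas, desired))
```

For instance, four replicas each handling 90 requests per second against a target of 50 would scale out to eight, while the same four replicas at 52 requests per second would stay put.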

Many tools are being built today to make life easier for everyone involved in a data science project. Some MLOps tools focus on solving the problems of a single phase, while others address the main problems across the whole cycle.

Konan: Machine Learning Deployments Made Easy!

As a data science and AI company, we found that we needed MLOps in our own ML lifecycle, so we created Konan, an MLOps platform focused on deploying, monitoring, and maintaining your ML models. Our aim is to make the production phase of the machine learning lifecycle as hassle-free as possible. Here’s a sample of what Konan offers:

Automatic provisioning and maintenance of infrastructure;

Automatic API creation with the best security practices;

Monitoring of everything that goes into and out of your model;

Insight into your model’s performance over time;

Model versioning;

Retraining your models in a pinch;

And more…

Head over to Konan’s page on our website to learn more or sign up for a free trial.
